PhD Student at the Georgia Institute of Technology

My name is Jorge Quesada. I am a PhD candidate in the School of Electrical and Computer Engineering at the Georgia Institute of Technology. I work in the OLIVES Lab with Professor Ghassan AlRegib on machine learning for scientific imaging, where my current focus is on seismic interpretation.

My current work at the OLIVES Lab centers on building self-supervised and domain-robust representation learning methods and stress-testing them under real distribution shift. In past projects, I have also explored human-in-the-loop systems, including uncertainty-aware annotations that capture labeler expertise and prompting strategies for the Segment Anything Model, with the goal of making workflows more reliable and interpretable in practice.

Beyond these, I have also worked in representation learning for neuroimaging, as well as inverse problems and mathematical optimization. I’m broadly motivated by questions at the intersection of natural and artificial intelligence—how brains and machines perceive, learn, and generalize—and I’m always excited to collaborate across domains.

Resume

Education

- Georgia Institute of Technology, Department of Electrical and Computer Engineering: Ph.D. Student in Machine Learning, Aug. 2021 - present
- Pontifical Catholic University of Peru: M.S. in Signal Processing, graduation 2018
- Pontifical Catholic University of Peru: B.S. in Electrical Engineering, graduation 2015

Experience

- Georgia Institute of Technology, Graduate Research Assistant, Aug. 2021 - present
- Sentinel, Computer Vision Data Scientist, Jul. 2020 - Dec. 2020
- Pontifical Catholic University of Peru, Graduate Researcher and Lecturer, Mar. 2016 - Jun. 2020
- Los Alamos National Laboratory, Research Intern, Jan. 2017 - Apr. 2017
- Jicamarca Radio Observatory, Research Assistant, Jan. 2015 - Apr. 2015

Teaching

- Generative and Geometric Deep Learning (ECE 8803), Course Developer and Graduate Teaching Assistant, Fall 2023
- Fundamentals of Machine Learning (ECE 4252/8803), Graduate Teaching Assistant, Spring 2024
- Senior Analog Laboratory (ECE 4043), Graduate Teaching Assistant, Spring/Summer 2023
- Digital Signal Processing, Main Instructor, Spring/Fall 2019

Honors & Awards

- Cadence Diversity in Technology Scholarship Recipient, 2023
- Computational Neural Engineering Training Program (CNTP) Scholar, 2021
- ICASSP 2018 Student Travel Grant, 2018
- Marco Polo Scholarship, 2016
- ICASSP 2016 Student Travel Grant, 2016

Selected Publications

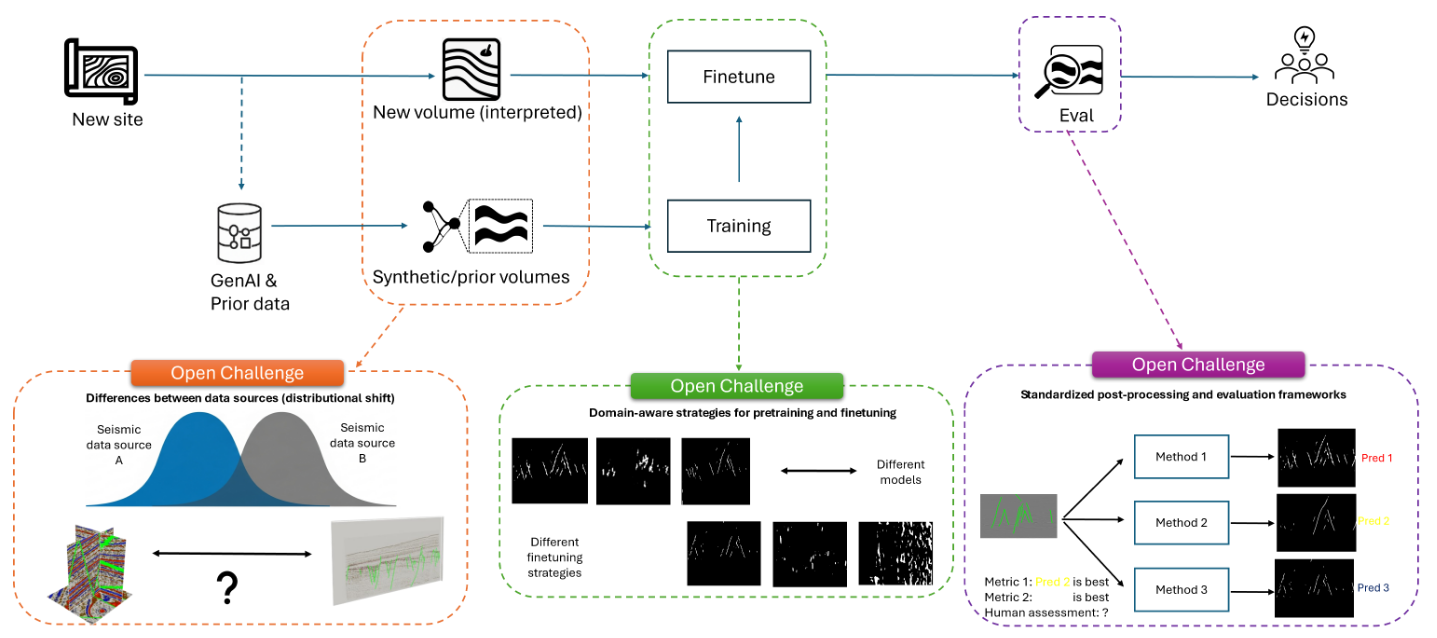

A Large-scale Benchmark on Geological Fault Delineation Models: Domain Shift, Training Dynamics, Generalizability, Evaluation and Inferential Behavior

Jorge Quesada, Chen Zhou, Prithwijit Chowdhury, Mohammad Alotaibi, Ahmad Mustafa, Yusuf Kumakov, Mohit Prabhushankar, Ghassan AlRegib

Submitted to IEEE Access 2025

We present the first large-scale benchmarking study for geological fault delineation. The benchmark evaluates over 200 model–dataset–strategy combinations under varying domain shift conditions, providing new insights into generalizability, training dynamics, and evaluation practices in seismic interpretation.

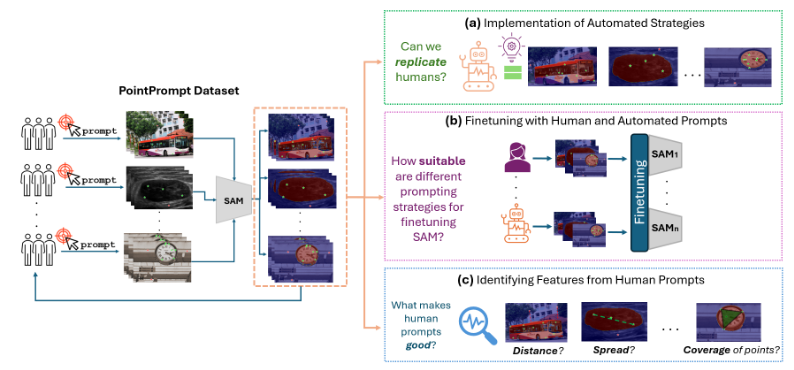

Benchmarking Human and Automated Prompting in the Segment Anything Model

Jorge Quesada*, Zoe Fowler*, Mohammad Alotaibi, Mohit Prabhushankar, Ghassan AlRegib (* equal contribution)

IEEE International Conference on Big Data 2024

We compare human-driven and automated prompting strategies in the Segment Anything Model (SAM). Through large-scale benchmarking, we identify prompting patterns that maximize segmentation accuracy across diverse visual domains.

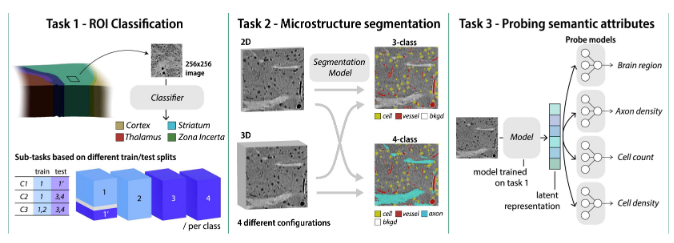

MTNeuro: A Benchmark for Evaluating Representations of Brain Structure Across Multiple Levels of Abstraction

Jorge Quesada, Lakshmi Sathidevi, Ran Liu, Nauman Ahad, Joy M. Jackson, Mehdi Azabou, Christopher Liding, Matthew Jin, Carolina Urzay, William Gray-Roncal, Erik Johnson, Eva Dyer

NeurIPS Datasets and Benchmarks Track 2022

We introduce MTNeuro, a multi-task neuroimaging benchmark built on volumetric, micrometer-resolution X-ray microtomography of mouse thalamocortical regions. The benchmark spans diverse prediction tasks—including brain-region classification and microstructure segmentation—and offers insights into the representation capabilities of supervised and self-supervised models across multiple abstraction levels.
